Linear Regression with All the Steps

Common misconceptions, implicit assumptions, and missed steps in linear regression.

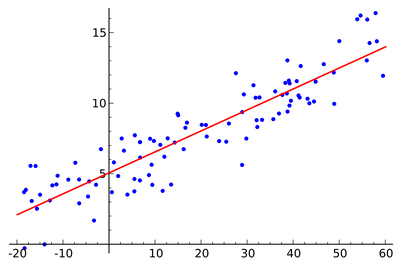

Linear regression is kind of a beautiful problem. I wanted to point out a few details that a lot of people miss and provide the derivation of the closed-form solution without skipping any steps.

Problem Set-up

We’re given a dataset of N points (xi,yi), where xi is a D-dimensional feature vectory and yi is a 1D scalar. In this case, y(x) is a random variable. We want to build a model such that, given some new inputs x, we can predict the mean output y^(x).

Our model hypothesis is that y is linear in w, not necessarily x, which is a common misconception:

y^(x)=j∑Dwjfj(x)+w0where wj are elements of the weight vector w and fj(x) is a (potentially non-linear) function applied to x. For the rest of this problem, we’ll assume f(x)=x for simplicity. For the convenience of matrix operations, we’ll roll the bias term inside w by prepending a dimension to x and defining:

f0(x)≡1;x0=1D=D+1Note that we are making a number of assumptions about the data and errors here - see this great Stats StackExchange answer for more details.

Loss Function

We want to choose model parameters w to fit the data well. To do this, we can minimize an error function that is larger if the model fits the data more poorly. We use the sum of squared errors as our loss function for a few reasons:

- The resulting function is smooth and differentiable, which is easier to optimize

- The errors from overshooting some amount are the same as undershooting by that amount

- The least squares solution gives us the maximum likelihood estimator (MLE) if the noise is an independent Gaussian (yi=wxi+ϵi,ϵ∼N(0,σ2))

Matrix Form

Before we dive into the solution, we’ll rewrite the problem formulation and loss function in matrix form, where Y^ is an Nx1 vector, X is a NxD matrix, and w is a Dx1 vector.

Y^=XwL=(Y^−Y)T(Y^−Y)=(Xw−Y)T(Xw−Y)Closed Form Solution

Now we can solve for the w that minimizes our loss function by taking the first derivative of the loss function L with respect to w and setting it equal to zero. This leads to our global minimum because the least-squares loss is convex.

∂w∂L(Xw−Y)T(Xw−Y)=0Before taking the derivative, we can expand out the product.

∂w∂L(wTXTXw−wTXTy−yTXw+yTy)=0Recall our matrix derivative properties (proofs):

∂x∂(xTAx)=(A+AT)x=2Ax(1)∂x∂(xTA)=A(2)∂x∂(Ax)=AT(3)Note that (A+AT)x=2Ax if A is symmetric.

Take the derivative. For the first term, use property 1 where A=XTX is symmetric (proof). For the second term, use property 2 where A=XTy. For the third term, use property 3 where A=yTX.

2XTXw−XTy−(yTX)T=02XTXw−2XTy=0w=(XTX)−1XTyThe solution w=(XTX)−1XTy is also known as the normal equation.

Discussion

Note that XTX may not be invertible if:

- There are too few examples for the number of features: N < D

- It is singular (by definition), i.e. the determinant is zero

- The columns are linearly dependent; there are redundant features

Here I focus on the closed-form Ordinary Least Squares solution, but it’s also important to understand the MLE, SVD, and gradient descent approaches. MLWiki has a great compact summary.

Further Reading

I really like the book Numerical Optimization by Jorge Nocedal and Stephen Wright. I recommend reading at least Section 2: Fundamentals of Unconstrained Optimization.